We’ve seen it before in movies like Avatar, but it appears we can indeed control humanoids in real life and have them mirror our body movements remotely.

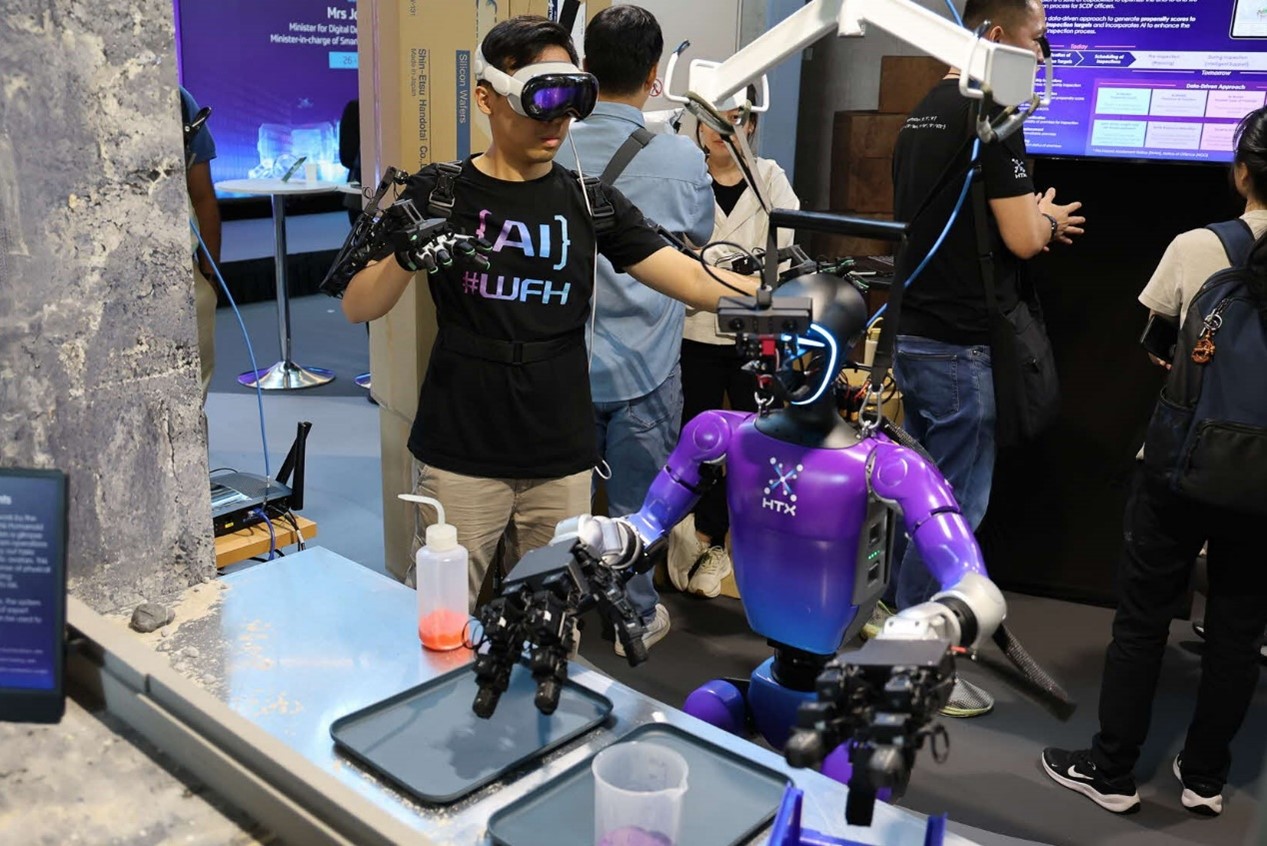

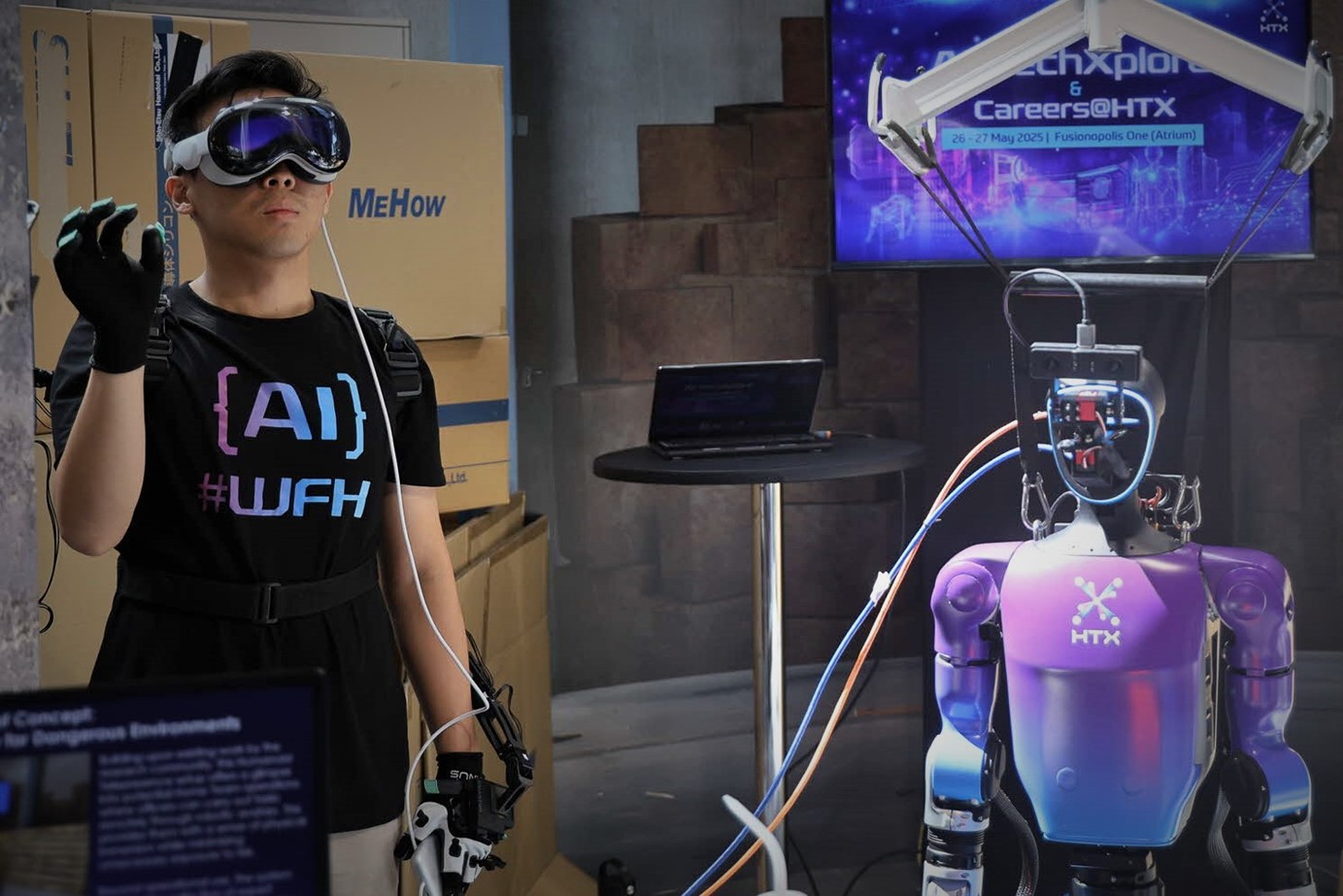

This much was proven when Sean Lim, an engineer at HTX’s Robotics, Automation & Unmanned Systems (RAUS) Centre of Expertise (CoE), controlled a humanoid robot using a custom-made exoskeleton at HTX’s AI TechXplore, which was held at Fusionopolis One on 26 and 27 May 2025.

In fact, this wasn’t the first time Sean had handled such tech. A few years ago, he put together a telepresence humanoid named STELER as his greenfield project when he was part of HTX’s Associate Programme.

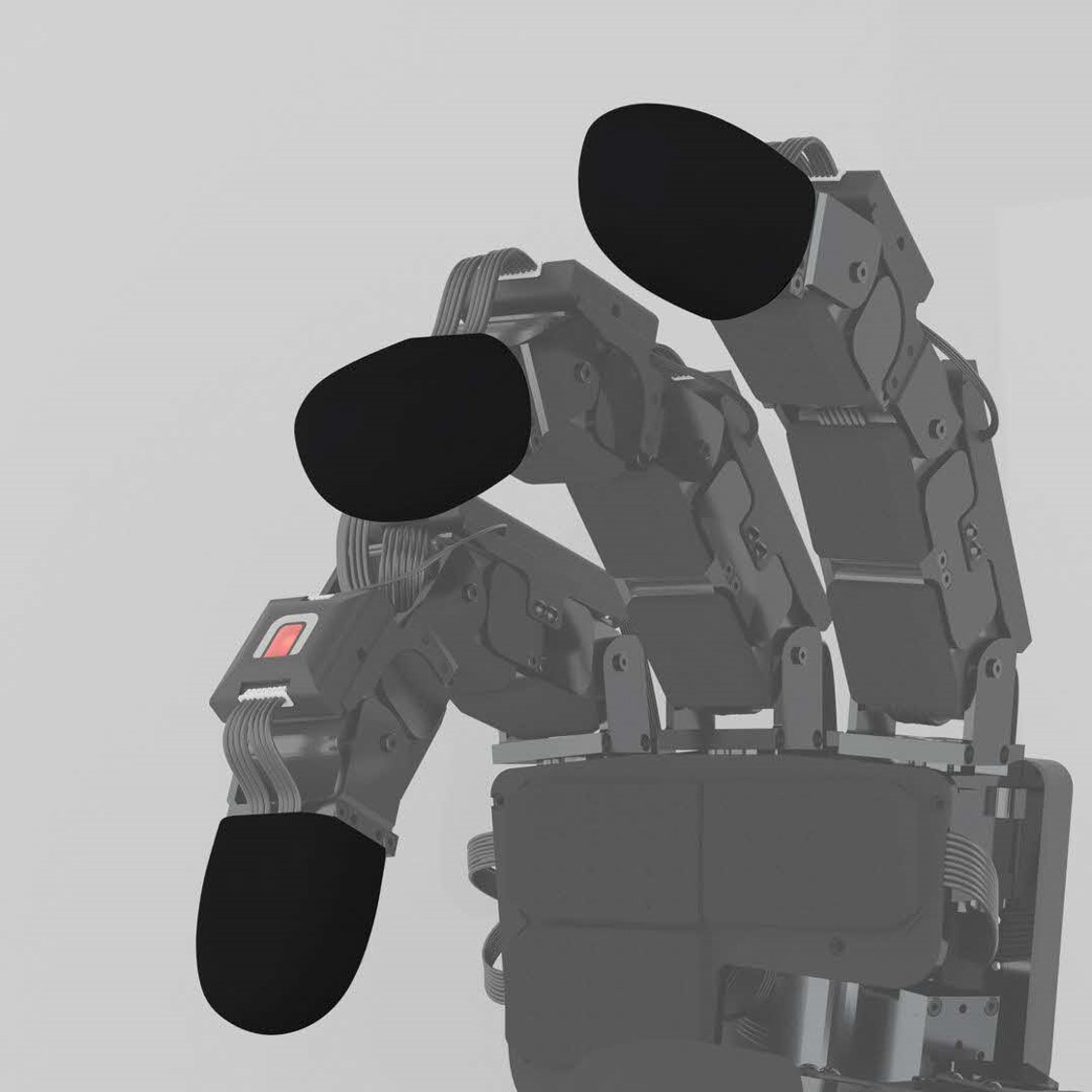

How STELER works:

Instagram reel by HTX Singapore (@htxsg).

What is telepresence technology?

Any innovation that allows users to feel present in another location via video is technically considered telepresence tech.

Imagine attending a class and feeling as if you are right there in the lecture theatre, and moving around the class courtesy of the mobile telepresence robot you are controlling from your home.

Sean operating a humanoid robot using VR goggles and an exoskeleton strapped to his back. (Photo: HTX/Law Yongwei)

Telepresence robots aren’t exactly new. Alexandra Hospital in Singapore was already trialling such robots for remote consultations in 2020. During the trial, a mobile robot would be dispatched to patients’ homes, allowing them to have their routine reviews with pharmacists.

Such tele-consultations are especially useful for patients who are homebound and have limited knowledge of operating digital devices.

This specific field has been garnering much attention in recent years thanks to the ANA Avatar XPRIZE, a multi-year competition that receives submissions on avatar systems from all over the world.

Sponsored by Japanese airline All Nippon Airways (ANA), the competition was last won by Team NimbRo, which developed an immersive telepresence system centred on an anthropomorphic mobile robot.

In the context of public safety in Singapore, telepresence robots are expected to be ready for operational use by mid-2027, courtesy of HTX’s Home Team Humanoid Robotics Centre (H2RC), which will be launched in mid-2026.

The robots developed by H2RC will focus on undertaking risky tasks such as firefighting, hazmat, and search and rescue operations, thus keeping human officers out of harm’s way.

The next step in the evolution of telepresence operations, said Sean, would be making the entire experience so immersive it is akin to becoming one with the robot.

This would be made possible with the following three elements:

What is needed #1: Depth perception

One crucial element in creating a highly immersive telepresence experience, said Sean, is vision or, more specifically, vision that allows for depth perception, which refers to the ability to perceive the distance between objects.

The use of two cameras as eyes provides stereo vision. (Photo: Freepik)

To allow for depth perception in STELER, Sean placed not one but two cameras on the robot to achieve what is known as “stereo vision,” which is similar to how our eyes work.

“There is less depth perception when you’re viewing things through just one camera. It’s the same with our eyes. This is why people who are blind in one eye tend to have poorer depth perception,” he explained.

Here’s one experiment you can do to understand the importance of stereo vision: close one eye and try threading a needle. You’ll find that doing so is much easier when you have both eyes open.
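To make the idea concrete, here is a minimal Python sketch of how two side-by-side cameras can yield distance. It assumes an idealised, rectified pair of pinhole cameras, for which depth equals focal length times baseline divided by disparity (how far the same object shifts between the left and right images). The function name and all numbers are illustrative assumptions and are not taken from STELER.

```python
def depth_from_disparity(focal_length_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Estimate distance (metres) to a point seen by two parallel cameras.

    Assumes an idealised, rectified stereo pair: depth = f * B / d.
    """
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity,
        # so no finite distance can be recovered.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    focal_length_px = 700.0  # hypothetical camera focal length, in pixels
    baseline_m = 0.065       # hypothetical camera spacing, roughly eye width
    for disparity_px in (5.0, 20.0, 80.0):
        depth = depth_from_disparity(focal_length_px, baseline_m, disparity_px)
        print(f"disparity {disparity_px:5.1f} px -> depth {depth:.3f} m")
```

Nearby objects shift a lot between the two images and distant ones barely shift at all, which is why a single camera, with no second image to compare against, gives far weaker depth cues.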

Meanwhile, the operator simply needs to don a pair of VR goggles to experience stereo vision, though Sean pointed out that one challenge here is video latency. A delay between what the operator sees and what their vestibular system senses, he added, can make for a rather nauseating viewing experience.

What is needed #2: Mirroring human actions

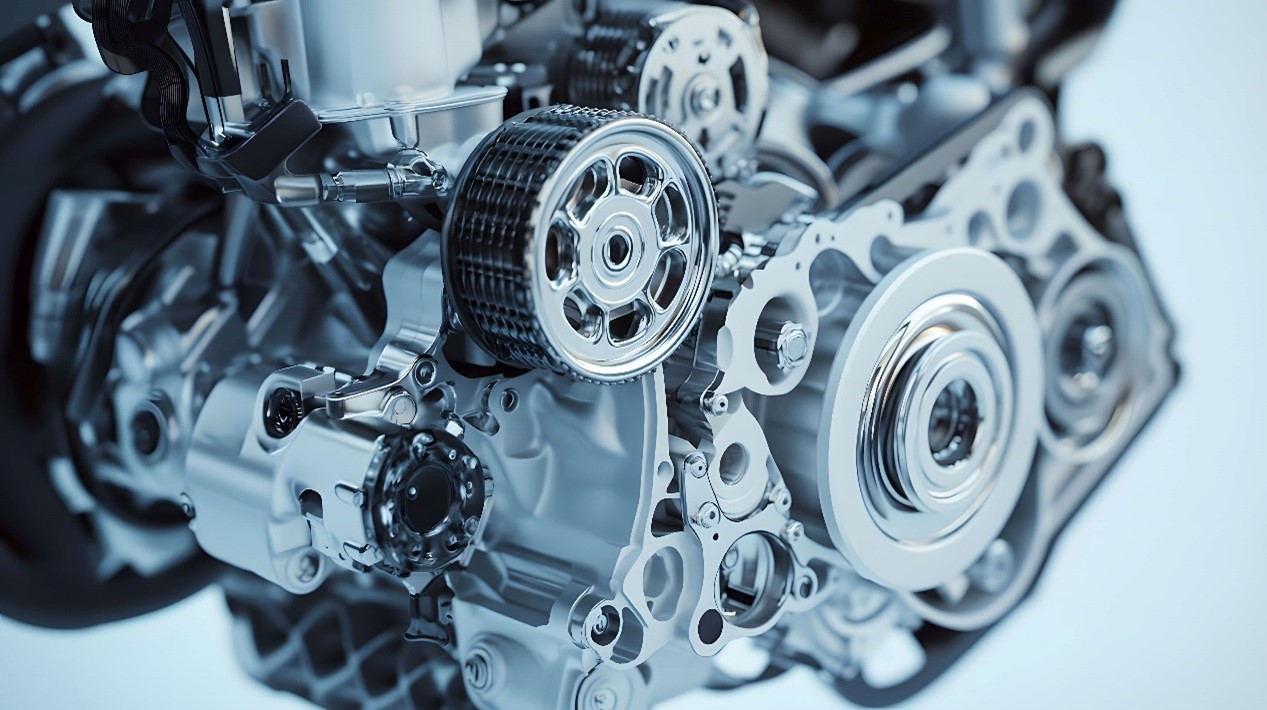

If you haven’t already noticed, the robots of today still can’t walk as fluidly as humans do. Most people would agree that even the world’s most realistic humanoid robots still move in a rather rigid and mechanical manner.

Replicating the dexterity humans have using motors is a tall order. (Photo: Freepik)

“In the past, many robots depended on what we call static stability to move. This means that at any point in time the robot’s centre of gravity is always directly above its point of contact on the ground. We humans, on the other hand, move using dynamic stability. In a sense, we’re always somewhat off balance and constantly tipping over,” explained Sean.

One reason robots of the past relied on static stability, he added, was simply that this mode of operation was easier to implement. But given how quickly technology has advanced over the years, robot manufacturers are now producing robots that are dynamically stable.
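The static stability condition Sean describes can be sketched in a few lines of Python. This is a simplified illustration, not how any particular robot is programmed: it assumes the area of ground contact (for example, a foot) is a convex polygon with vertices listed counter-clockwise, and checks whether the centre of gravity, projected straight down, falls inside it. The function name and the foot dimensions are hypothetical.

```python
from typing import Sequence, Tuple

Point = Tuple[float, float]


def is_statically_stable(com_xy: Point, support_polygon: Sequence[Point]) -> bool:
    """Return True if the ground projection of the centre of gravity lies
    inside (or on the edge of) a convex support polygon.

    The polygon's vertices must be given in counter-clockwise order.
    """
    n = len(support_polygon)
    if n < 3:
        raise ValueError("support polygon needs at least three vertices")
    for i in range(n):
        ax, ay = support_polygon[i]
        bx, by = support_polygon[(i + 1) % n]
        # A negative cross product means the point lies to the right of
        # this edge, i.e. outside a counter-clockwise polygon.
        cross = (bx - ax) * (com_xy[1] - ay) - (by - ay) * (com_xy[0] - ax)
        if cross < 0:
            return False
    return True


if __name__ == "__main__":
    # Hypothetical 20 cm x 10 cm foot, corners listed counter-clockwise (metres).
    foot = [(0.0, 0.0), (0.2, 0.0), (0.2, 0.1), (0.0, 0.1)]
    print(is_statically_stable((0.10, 0.05), foot))  # centre of gravity over the foot
    print(is_statically_stable((0.30, 0.05), foot))  # centre of gravity ahead of the foot
```

A statically stable robot keeps this check true at every instant. A dynamically stable walker deliberately lets it fail for a moment and recovers by placing its next step, which is the "constantly tipping over" that Sean mentions.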

Another limitation faced is the dexterity of robotic arms and fingers.

“To replicate the full range of motion of the human hand, a robotic hand would need an inordinate number of motors, but having so many motors means the hand is going to be incredibly heavy, which is obviously unfeasible,” said Sean.

Why are natural movements important? Because the more closely robots can mimic our movements, the more accurately skills are translated, meaning human operators can command the robot to move around and interact with the environment more effortlessly.

So, will robots be able to move as naturally as humans?

Sean believes it’s possible – we just need to be patient.

“We’re currently limited by hardware. But given how quickly it’s advancing, it’s only a matter of time before motors and actuators evolve to a stage that makes natural movements possible,” he said.

What is needed #3: Haptic feedback

Now, you’re probably wondering why Home Team officers would need to be able to feel something through their avatar with the use of haptic feedback.

HaptX’s gloves allow users to feel virtual objects with their hands (Photo: HaptX)

According to Sean, this capability would allow the operator to better tackle delicate tasks, such as unzipping a bag to check for explosives, through a robot.

“A traditional robotic arm can certainly pick up objects. But it cannot determine how much force to exert when doing so. Having such an ability is important because it would ensure that fragile objects aren’t crushed by the force of the grip,” he added.

“Imagine having to pick up a glass canister filled with highly toxic compounds. Using too much force would crack open the canister.”
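As a rough illustration of why force sensing matters, the Python sketch below closes a gripper in small steps until a measured contact force reaches a gentle target, instead of squeezing blindly. Everything here is an assumption made for the example: the object is simulated as a simple spring, and the function names, gains and force values are hypothetical rather than taken from any real robot or glove.

```python
def simulated_contact_force(closure_mm: float,
                            contact_at_mm: float = 10.0,
                            stiffness_n_per_mm: float = 2.0) -> float:
    """Stand-in for a fingertip force sensor: the object behaves like a
    spring that starts pushing back once the fingers close past contact."""
    return max(0.0, closure_mm - contact_at_mm) * stiffness_n_per_mm


def grip_with_force_limit(target_force_n: float,
                          max_safe_force_n: float,
                          step_gain_mm_per_n: float = 0.2,
                          max_steps: int = 200) -> tuple:
    """Close the gripper until the sensed force is near the target.

    Returns (final closure in mm, final sensed force in newtons).
    """
    if target_force_n > max_safe_force_n:
        raise ValueError("target force exceeds the safe limit for this object")
    closure_mm = 0.0
    for _ in range(max_steps):
        force = simulated_contact_force(closure_mm)
        error = target_force_n - force
        if abs(error) < 0.01:
            break  # close enough to the target force, stop squeezing
        # Proportional step: close quickly when far from the target,
        # gently when near it.
        closure_mm += step_gain_mm_per_n * error
    return closure_mm, simulated_contact_force(closure_mm)


if __name__ == "__main__":
    closure, force = grip_with_force_limit(target_force_n=3.0, max_safe_force_n=5.0)
    print(f"closed {closure:.2f} mm, gripping with {force:.2f} N")
```

Without the force reading, the gripper would have no way of knowing when to stop closing, which is the problem Sean describes with the glass canister.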

Sean pointed out that one tech company currently leading the way in this field is HaptX, which leverages pneumatics (using gas or pressurised air in mechanical systems) to give operators a sense of touch. Its special gloves feature hundreds of actuators that provide force feedback to the fingers.

Of course, these gloves are meant for human use. Strapping them onto a robotic hand wouldn’t serve any purpose since the robot can’t feel the feedback.

In order to feel what the robot is touching, engineers would have to equip the robotic fingers with sensors that relay information back to the haptic gloves worn by the human operator.

One company making inroads in this field is XELA Robotics, which has created curved fingertip sensors for robots.

XELA Robotics’ tactile sensors for robotic hands (Photo: XELA Robotics)

Tech giant Meta, too, looks to be getting into the game. It announced last year that it would collaborate with Wonik Robotics and sensor firm GelSight to commercialise tactile sensors for artificial intelligence.

Integrating the sensors on the robot with the gloves used by the operator, however, would be a big challenge on its own, said Sean.

The use of telepresence robotics allows humans to tackle dangerous tasks like firefighting without exposure to risk. (Photo: HTX/Law Yongwei)

As you can see, a highly immersive telepresence experience isn’t impossible, but it may be some time before it becomes a reality.

When it does come to fruition though, such tech has the potential to revolutionise the way many industries – not just the public safety sector – operate.

The world has already seen glimpses of its potential.

In 2020, the Model-T robot by Japanese firm Telexistence was used to restock drinks in a FamilyMart shop in Tokyo. The person controlling the robot, who used a pair of VR goggles and handheld controllers, was situated several kilometres away.

In 2024, a Chinese surgeon situated in Rome carried out a prostate removal operation on a patient in Beijing with the use of robotic arms hooked up to a 5G mobile broadband network. This was hailed as the world’s first live transcontinental remote robotic surgery.