Intelligent robots have, for the most part, been confined to science fiction and popular culture. You may be familiar with the adorable trash-compacting robot from its namesake Pixar film, Wall-E, or are perhaps equal parts awestruck and perturbed by the cyborg assassin played by Arnold Schwarzenegger in the Terminator franchise.

While those fictional characters may be wildly dissimilar in purpose and appearance, they share a common trait: the ability to dynamically interact with their environments. This capability is the hallmark of Embodied Artificial Intelligence (AI), which integrates AI into physical entities such as robots.

Such robots, according to Dr Joyce Lim, a lead engineer at HTX’s Robotics, Automation and Unmanned Systems Centre of Expertise (RAUS CoE), could very well become a reality in the not-too-distant future.

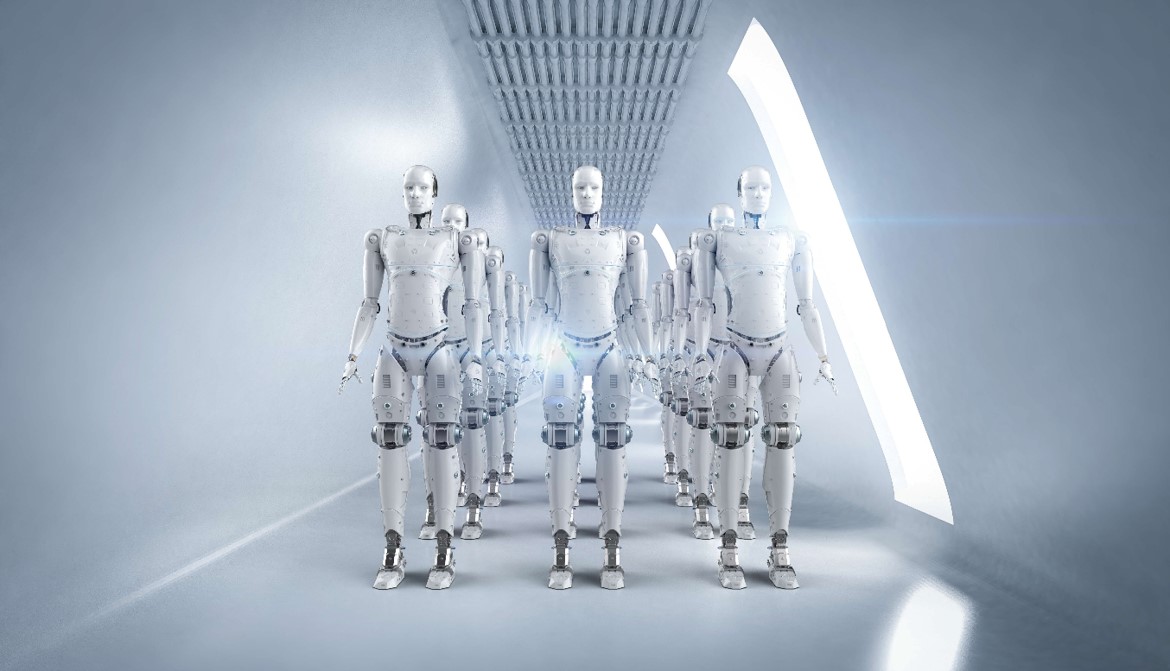

In fact, Joyce and her colleagues are already developing embodied robotic agents that “can move, reason and augment Home Team officers in dull, dangerous and dirty tasks”. Such robots were on show at HTX’s AI TechXplore event in late May.

Humanoid robots were a crowd magnet at HTX’s AI TechXplore event in May. (Photo: HTX/Law Yong Wei)

What makes Embodied AI so cutting-edge?

You may have come across the fully autonomous AI-enabled patrol robot that is already being deployed for traffic management purposes at Changi Airport. Joyce explained that while such technology may incorporate elements of AI to enable object detection, it is typically pre-programmed to behave in a certain manner.

An Embodied AI robot, on the other hand, would be capable of reasoning.

“If we train a model to detect vehicles, it won’t be able to pick up other items such as trolleys that have been left there for too long,” she said.

“An Embodied AI robot, however, would be able to do so as it actively learns, adapts and responds to its environment in real-time, thus adjusting its behaviour as it encounters new situations.”

RAUS engineers posing with the autonomous AI-enabled patrol robot they helped develop. (Photo: HTX)

Such capabilities, she added, position Embodied AI as the next frontier of robotics.

“Unlike traditional robots that only follow a set of rules, Embodied AI systems integrate sensory feedback, which allows them to ‘see’, ‘hear’ and ‘feel’ the world around them,” she explained.

Such robots have yet to materialise, but recent developments suggest that it is only a matter of time before they do.

UK startup Wayve, for example, has built foundation models that enable vehicles to sense and respond to traffic conditions through a combination of camera, GPS and radar/LiDAR sensors, coupled with end-to-end AI.

Unlike Tesla’s autonomous driving systems, which rely on extensive real-world data collection and learning through vast fleet experience, Wayve’s Embodied AI system utilises a generalised, adaptive AI model that can dynamically adjust to new inputs, scenarios or environments it wasn’t explicitly trained for. This is made possible by the integration of advanced machine learning techniques like reinforcement learning, unsupervised learning, and transfer learning.

Today’s smart cars are poised to become even smarter with Embodied AI. (Photo: Freepik)

It’s going to take more than just GPT

According to Joyce, a common misconception is that the key enabler of Embodied AI is large multi-modal models (LMMs) like GPT-4o from OpenAI.

Although Embodied AI systems do tap LMMs such as GPT to understand, generate and respond to complex language in real time, there remains the formidable challenge of translating the digital output of AI models into physical actions.

She cited the example of a camera-equipped embodied robotic agent built to sort canned drinks, and pointed out the complex engineering work involved.

“How do we estimate the position of the object relative to the robot, and how do we instruct the robot to grasp it and place it in the correct position for sorting? How do we estimate the grasping [force] so that we don’t crush the canned drinks? And how can we plan all these trajectories so that they are optimised?” she said.

“There are many challenges involved. You can’t just plonk GPT into a robot and expect it to think and act for itself.”

Creating a robot that can dynamically react to its surroundings and determine the appropriate force to exert when grasping different objects is no small feat.

Presently, Joyce and her team are focusing on building more robust robotic planners and controllers. Planners translate the output from GPT into a step-by-step plan for a robot to reach its goal, while controllers handle the real-time adjustments needed to follow that plan accurately.

The team is also working on vision-language navigation, reinforcement learning, and modelling and simulation, which involves creating virtual environments where AI agents can learn new skills and practise interacting with the world safely and efficiently.

One project that Joyce and her team are currently working on is a fixed robotic arm capable of comprehending text commands to perform tasks such as moving an object of interest. Next, they plan to mount the robotic arm onto a quadruped (four-legged) robot, with a view to developing a prototype that can be deployed for search and retrieval tasks.

Joyce acknowledged that there are numerous obstacles ahead. For instance, the seemingly simple task of opening a door still stumps many “smart” robots, given the varying mechanisms behind different types of doors. More data is also needed, which is especially crucial for developing Embodied AI robots that perform public safety tasks.

The act of opening a door may seem simple to most humans, but getting a robot to do the same can be quite a tall order. (Photo: Freepik)

Challenges aside, HTX’s RAUS CoE is already a step ahead in elevating the Home Team’s Embodied AI capabilities by bringing in humanoid robots. Joyce said a humanoid robot will be much more capable than a quadruped, given that it has more degrees of freedom.

While her team is still exploring use cases for Embodied AI robots with Home Team Departments, she shared that their first order of business will be a feasibility study and data collection.

She added that humanoid robots have the advantage of height over quadrupeds, and are thus better equipped to perform tasks such as checking overhead compartments.

“The world is built for humans, so the humanoid robot is thus better suited for their tasks,” she reasoned.

Humanoid robots for law enforcement are not a pipe dream: it may just be a matter of time before such tech hits the streets.

She believes that it will be only a few years before embodied robotic agents are deployed in public settings to support Home Team officers. In fact, HTX is now building a dedicated facility, the Home Team Humanoid Robotics Centre (H2RC), which will focus on developing Embodied AI robots for public safety use cases. H2RC is expected to roll out such robots from 2029.

“This doesn’t mean that we’re replacing the officers, but rather augmenting their capabilities so that they have less exposure to hazards while protecting the country and its civilians,” she said.

“Imagine subduing a knife-wielding criminal using an intelligent robot that can think on its own. That’s not sci-fi. That’s the future we’re looking at.”